Hi.

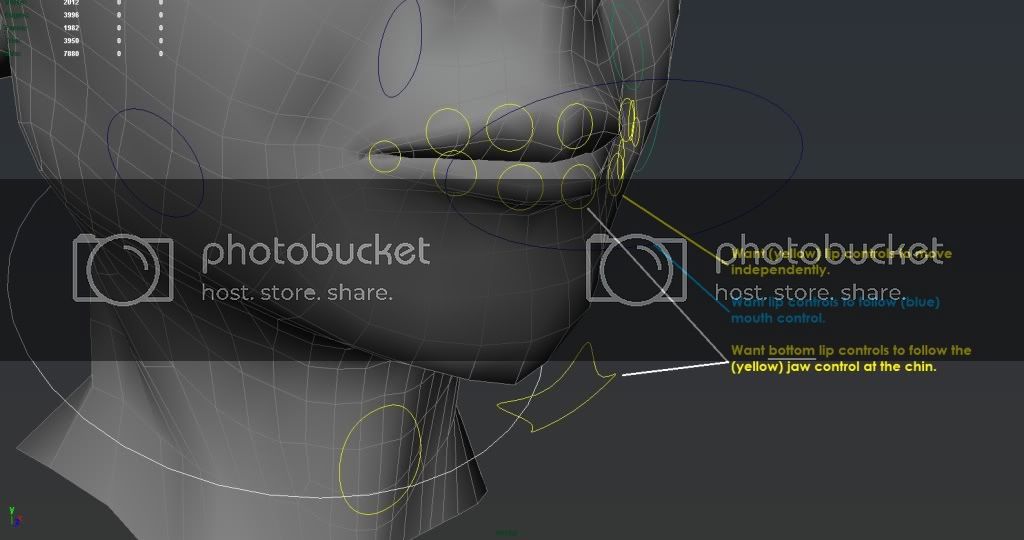

For the human face rigs I build for our projects, I take into account that when the jaw opens, the flesh and muscles of the cheeks, and even the nose, move along with it.

This means I had to reproduce this behaviour in a simple manner.

So if your jaw joint is a child of the head joint, just like the rest of the face joints, you need to drive these “free” joints whenever the jaw is rotated.

Bones:

jnt_head:

|-> jnt_jaw

|-> jnt_mid_lowerLip

|-> jnt_mid_upperLip

|-> jnt_left_nostril

…

These joints stand for different levels of movement influence. The jaw should influence the lower lips more than the upper lips, and the nostrils and nose tip only slightly.

To reproduce this, we set up four stages, each consisting of an individual group:

ctrl_head:

|-> ctrl_jaw_grp

|-> ctrl_jaw_lower_grp

|-> ctrl_jaw_mid_grp

|-> ctrl_jaw_upper_grp

All of the groups need to have zeroed-out rotations!
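As a rough sketch, the four groups could be created with Maya's Python commands like this. It assumes a transform named ctrl_head already exists; the function name and the `cmds` argument are my own illustration (inside Maya you would pass the `maya.cmds` module). Placing the group pivots is not covered here.

```python
JAW_GROUPS = [
    "ctrl_jaw_grp",
    "ctrl_jaw_lower_grp",
    "ctrl_jaw_mid_grp",
    "ctrl_jaw_upper_grp",
]


def create_jaw_groups(cmds, parent="ctrl_head"):
    """Create the four empty jaw groups under `parent` with zeroed rotations.

    `cmds` is expected to be the maya.cmds module (passed in so the
    function itself has no import-time dependency on Maya).
    """
    created = []
    for name in JAW_GROUPS:
        # An empty group created directly under its parent.
        grp = cmds.group(empty=True, name=name, parent=parent)
        # Set the rotation explicitly so the zeroed-out requirement is visible.
        cmds.setAttr(grp + ".rotate", 0, 0, 0, type="double3")
        created.append(grp)
    return created


# Inside Maya (assumption: ctrl_head exists in the scene):
#   import maya.cmds as cmds
#   create_jaw_groups(cmds)
```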

Now comes the trick!

ctrl_jaw_grp is the one that will be controlled by your NURBS curve through an orient or parent constraint.

This means that if the control is moved or rotated, the group moves too. Now we need to distribute different values/influences from ctrl_jaw_grp to the remaining jaw groups.

To do this, we create a multiply/divide node, which is an auxiliary render (utility) node. It has an input (x, y, z), a multiplier (x, y, z) and an output holding the resulting values (x, y, z). We create one multiply/divide node for each of the remaining jaw groups, one per influence level mentioned earlier.

Let’s say we have these values as influences:

ctrl_jaw_grp -> 80% -> ctrl_jaw_lower_grp

ctrl_jaw_grp -> 50% -> ctrl_jaw_mid_grp

ctrl_jaw_grp -> 20% -> ctrl_jaw_upper_grp

That means we connect the rotation of ctrl_jaw_grp to the input of each multiply/divide node and set the multiplier to the matching percentage:

80% = 0.8

50% = 0.5

20% = 0.2
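To make the arithmetic concrete, here is a small plain-Python sketch (no Maya required) of what the three nodes compute. The 30 degree jaw rotation is only an illustrative input, and the function name is my own.

```python
# Influence multipliers from the list above, keyed by the group they drive.
INFLUENCES = {
    "ctrl_jaw_lower_grp": 0.8,
    "ctrl_jaw_mid_grp": 0.5,
    "ctrl_jaw_upper_grp": 0.2,
}


def distribute_rotation(jaw_rotation, influences=INFLUENCES):
    """Scale an (x, y, z) rotation in degrees by each group's multiplier."""
    return {
        group: tuple(axis * factor for axis in jaw_rotation)
        for group, factor in influences.items()
    }


# Hypothetical input: ctrl_jaw_grp rotated 30 degrees around X.
for group, rotation in distribute_rotation((30.0, 0.0, 0.0)).items():
    print(group, rotation)
```

With a 30 degree opening, the lower, mid and upper groups end up at roughly 24, 15 and 6 degrees.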

Every node is set to multiply.

As you would guess, we then connect the output of each node to the rotation of the matching jaw group.
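The node setup described above could be scripted roughly like this with Maya's Python commands. Treat it as a sketch: the function name, the `_mult` node suffix and the `cmds` argument are my own assumptions, and the groups are expected to exist already. Inside Maya you would pass the `maya.cmds` module.

```python
INFLUENCES = {
    "ctrl_jaw_lower_grp": 0.8,
    "ctrl_jaw_mid_grp": 0.5,
    "ctrl_jaw_upper_grp": 0.2,
}


def connect_jaw_influences(cmds, driver="ctrl_jaw_grp", influences=INFLUENCES):
    """Create one multiplyDivide node per driven group and wire it up."""
    nodes = []
    for target, factor in influences.items():
        # "_mult" is a hypothetical naming suffix, not from the original setup.
        node = cmds.createNode("multiplyDivide", name=target + "_mult")
        # Operation 1 means "multiply" on a multiplyDivide node.
        cmds.setAttr(node + ".operation", 1)
        # Same multiplier on all three axes.
        for axis in "XYZ":
            cmds.setAttr(node + ".input2" + axis, factor)
        # Driver rotation in, scaled rotation out to the driven group.
        cmds.connectAttr(driver + ".rotate", node + ".input1")
        cmds.connectAttr(node + ".output", target + ".rotate")
        nodes.append(node)
    return nodes


# Inside Maya (assumption: all four jaw groups exist in the scene):
#   import maya.cmds as cmds
#   connect_jaw_influences(cmds)
```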

Now you can rotate your control or ctrl_jaw_grp and see how the other groups follow concurrently.

It is important to parent (in the Outliner hierarchy) the group of each face control under the right jaw-level group.

For example, I have the chin group, every lower lip group and more parented like this:

ctrl_head:

|-> ctrl_jaw_grp

|   |-> ctrl_chin_grp

|-> ctrl_jaw_lower_grp

|   |-> ctrl_mid_lowerLip_grp

|   |-> ctrl_right_lowerLip_grp

|   |-> ctrl_left_lowerLip_grp

|-> ctrl_jaw_mid_grp

|   |-> ctrl_left_mouthCorner_grp

|   |-> ctrl_right_mouthCorner_grp

|-> ctrl_jaw_upper_grp

|   |-> ctrl_left_nostril_grp

|   |-> ctrl_right_nostril_grp

|   |-> ctrl_nose_grp
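The parenting could be scripted along these lines. The mapping reflects my reading of the listing (chin under ctrl_jaw_grp, lower lips under the lower group, mouth corners under the mid group, nostrils and nose under the upper group); the function name and the `cmds` argument are assumptions for illustration, with `maya.cmds` passed in when run inside Maya.

```python
# Jaw-level group -> face control groups parented beneath it.
HIERARCHY = {
    "ctrl_jaw_grp": ["ctrl_chin_grp"],
    "ctrl_jaw_lower_grp": [
        "ctrl_mid_lowerLip_grp",
        "ctrl_right_lowerLip_grp",
        "ctrl_left_lowerLip_grp",
    ],
    "ctrl_jaw_mid_grp": [
        "ctrl_left_mouthCorner_grp",
        "ctrl_right_mouthCorner_grp",
    ],
    "ctrl_jaw_upper_grp": [
        "ctrl_left_nostril_grp",
        "ctrl_right_nostril_grp",
        "ctrl_nose_grp",
    ],
}


def parent_face_groups(cmds, hierarchy=HIERARCHY):
    """Parent every face control group under its jaw-level group."""
    for jaw_group, children in hierarchy.items():
        for child in children:
            # cmds.parent takes the child first and the new parent last.
            cmds.parent(child, jaw_group)


# Inside Maya (assumption: all listed groups exist in the scene):
#   import maya.cmds as cmds
#   parent_face_groups(cmds)
```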

That’s all.

I should add that each controller is nested in several groups, the tiers I referred to earlier. This way I still have individual control over each single control. But the groundwork for the jaw opening is done with this approach.

Conclusion:

Mouth open -> distributed movement among the controls from top to bottom of the human face.